Probabilistic Modeling for Human Mesh Recovery

Abstract

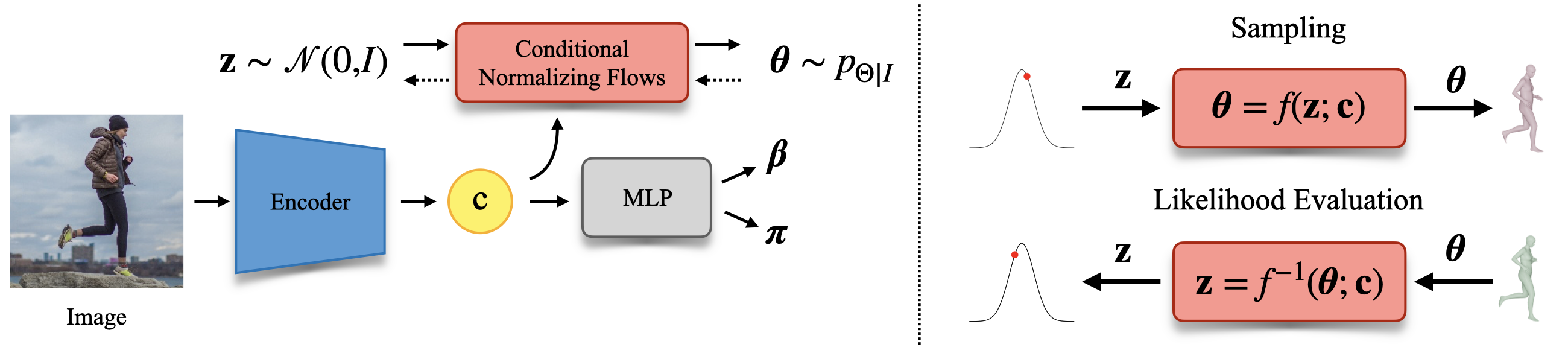

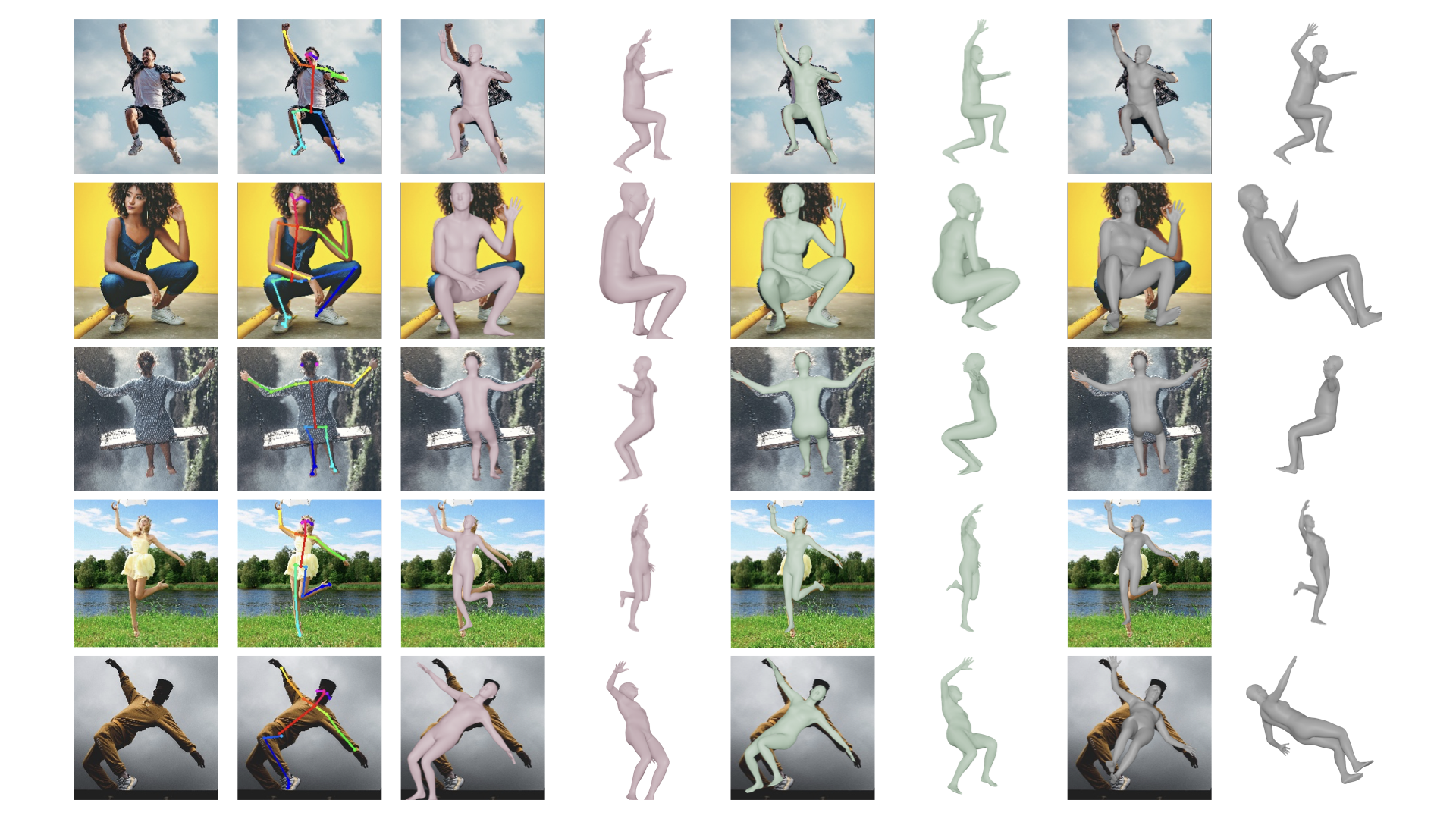

This paper focuses on the problem of 3D human reconstruction from 2D evidence. Although this is an inherently ambiguous problem, most recent works avoid modeling the uncertainty and regress a single estimate for a given input. In contrast, we propose to embrace the reconstruction ambiguity and recast the problem as learning a mapping from the input to a **distribution** of plausible 3D poses. Our approach is based on normalizing flows and offers a series of advantages. For conventional applications, where a single 3D estimate is required, our formulation allows for efficient mode computation. Using the mode leads to performance comparable with the state of the art among deterministic unimodal regression models. At the same time, since we have access to the likelihood of each sample, we demonstrate that our model is useful in a series of downstream tasks, where we leverage the probabilistic nature of the prediction as a tool for more accurate estimation. These tasks include reconstruction from multiple uncalibrated views, as well as human model fitting, where our model acts as a powerful image-based prior for mesh recovery. Our results validate the importance of probabilistic modeling and indicate state-of-the-art performance across a variety of settings.

Overview

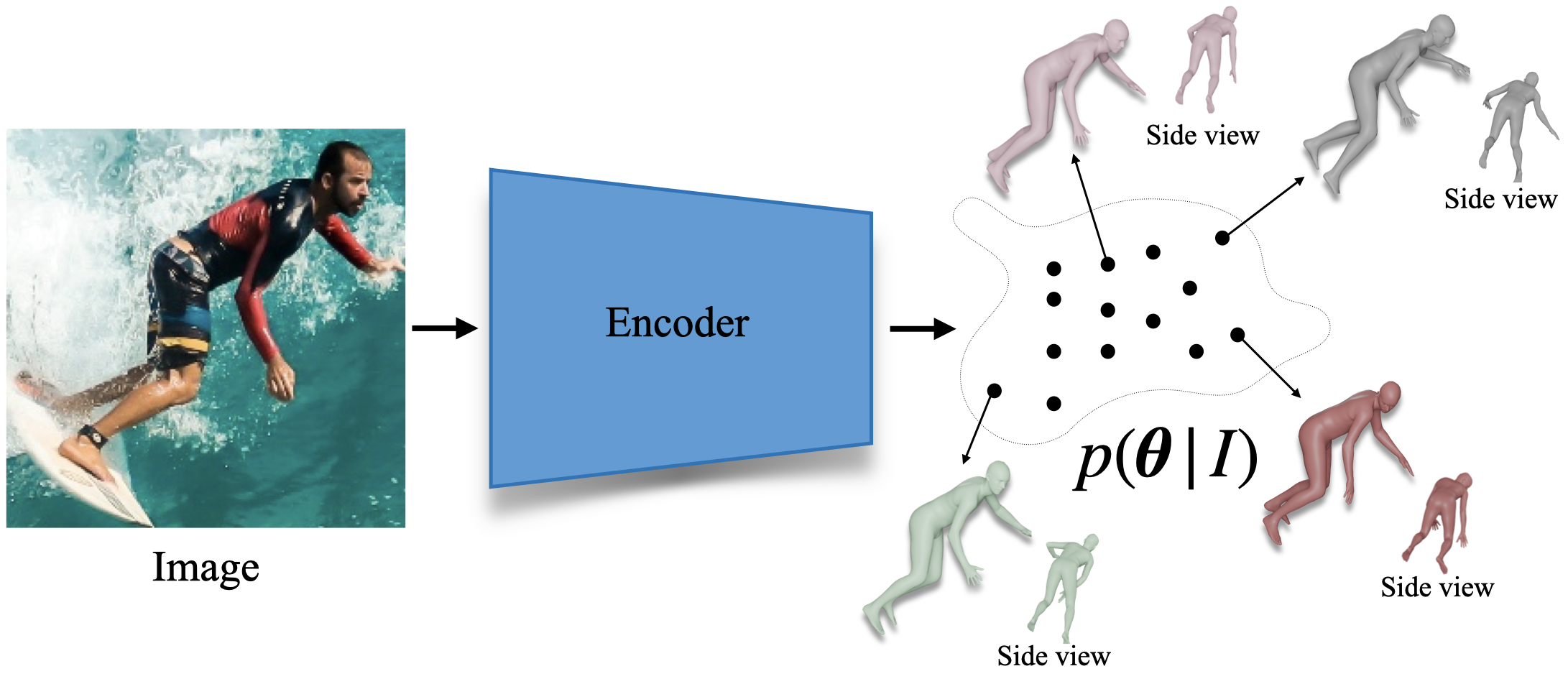

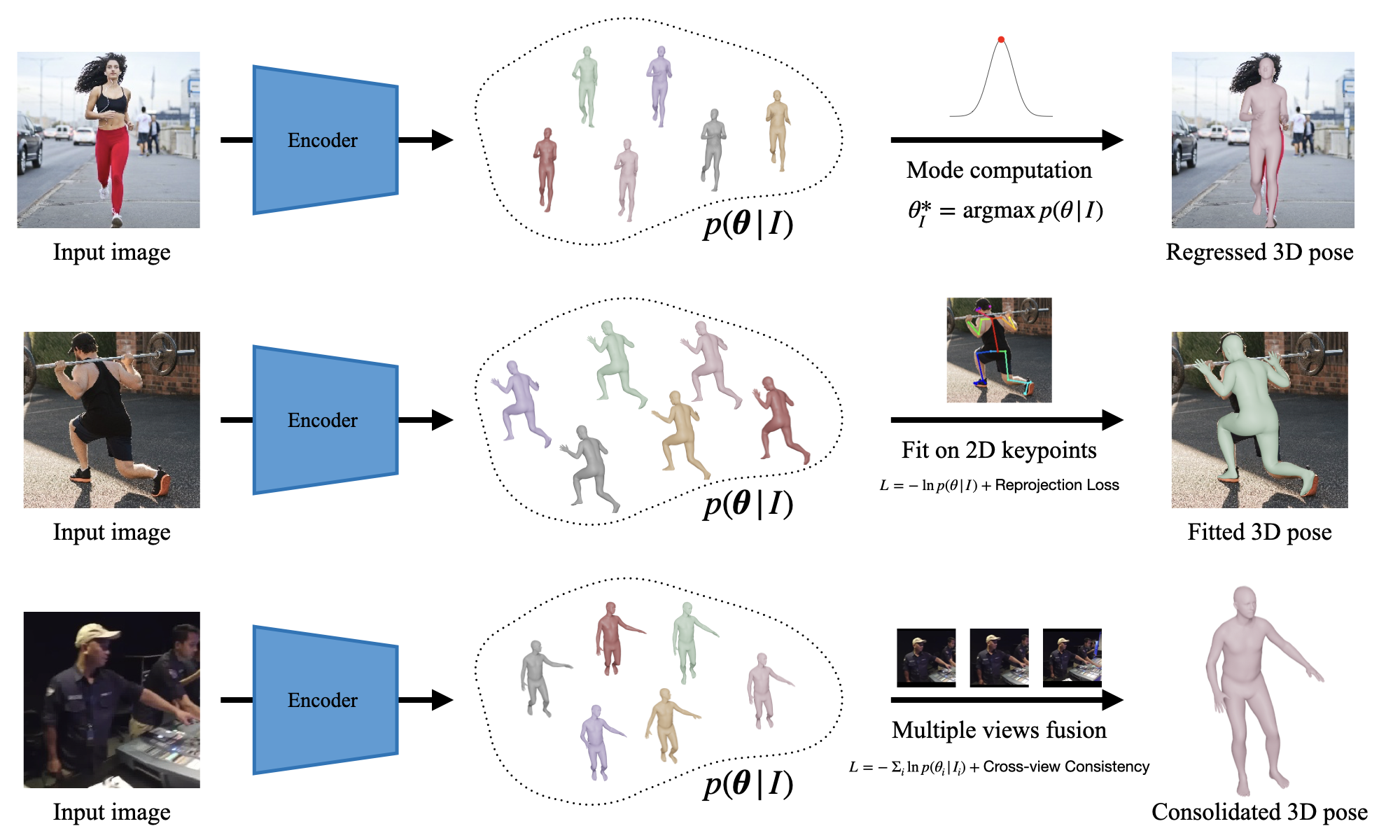

We propose to recast the problem of 3D human reconstruction as learning a mapping from the input to a distribution of 3D poses. The output distribution has high probability mass on a diverse set of poses that are consistent with the 2D evidence.
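For readers unfamiliar with normalizing flows, the standard change-of-variables identity shows how such a model yields an exact likelihood for every pose. The notation here is assumed for illustration and does not appear on this page: $c$ is the image feature, $\theta$ the pose parameters, $z$ a latent variable with a standard normal base density $p_Z$, and $f$ an invertible map conditioned on $c$.

$$
\theta = f(z;\, c), \qquad z \sim \mathcal{N}(0, I), \qquad
\log p(\theta \mid c) = \log p_Z\!\left(f^{-1}(\theta;\, c)\right) - \log \left| \det \frac{\partial f(z;\, c)}{\partial z} \right|_{z = f^{-1}(\theta;\, c)}
$$

Sampling amounts to drawing $z$ and pushing it through $f$, while evaluating the likelihood of a given pose uses the inverse map and the log-determinant term.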

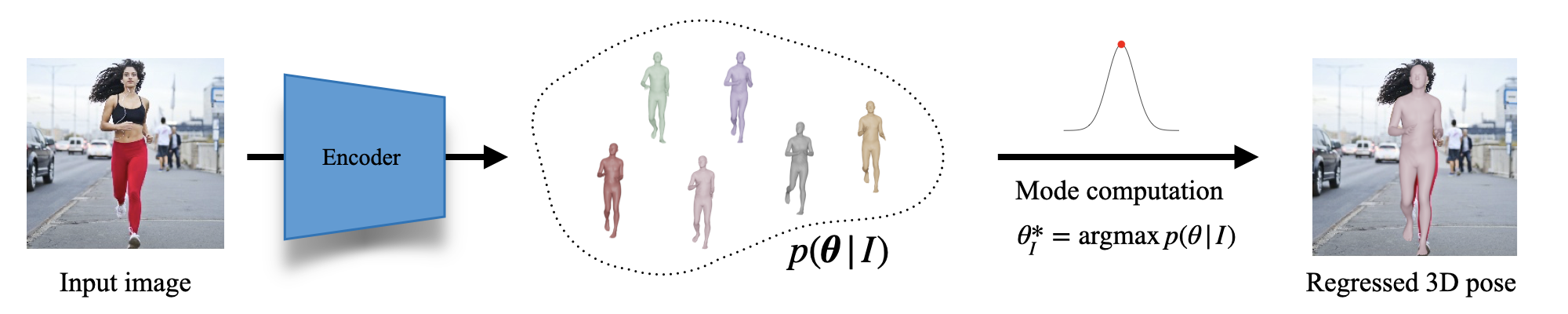

In the typical case of 3D mesh regression, we can naturally use the mode of the distribution and perform on par with approaches regressing a single 3D mesh.
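The sketch below illustrates why the mode can be cheap to compute in a flow model. It uses a toy one-layer conditional affine flow, which is an assumption made for illustration and not the architecture used in this work. For a flow whose Jacobian determinant does not depend on the latent `z`, the mode of the output distribution is the image of the base mode `z = 0`, so a single forward pass suffices. All names (`ConditionalAffineFlow`, `W_mu`, `W_s`) are hypothetical.

```python
import numpy as np


class ConditionalAffineFlow:
    """Toy one-layer flow: theta = mu(c) + exp(s(c)) * z, with z ~ N(0, I).

    Hypothetical stand-in for a learned flow; the weights are random.
    """

    def __init__(self, context_dim, pose_dim, seed=0):
        rng = np.random.default_rng(seed)
        self.W_mu = rng.normal(scale=0.5, size=(pose_dim, context_dim))
        self.W_s = rng.normal(scale=0.1, size=(pose_dim, context_dim))

    def _params(self, c):
        # Shift and log-scale, both functions of the conditioning feature c.
        return self.W_mu @ c, self.W_s @ c

    def forward(self, z, c):
        # Map latent z (shape (D,) or (N, D)) to pose space.
        mu, s = self._params(c)
        return mu + np.exp(s) * z

    def log_prob(self, theta, c):
        # Change of variables: log p(theta|c) = log p_Z(z) - sum(s).
        mu, s = self._params(c)
        z = (theta - mu) * np.exp(-s)
        dim = z.shape[-1]
        log_base = -0.5 * np.sum(z ** 2, axis=-1) - 0.5 * dim * np.log(2 * np.pi)
        return log_base - np.sum(s)


context_dim, pose_dim = 8, 6
flow = ConditionalAffineFlow(context_dim, pose_dim)
rng = np.random.default_rng(1)
c = rng.normal(size=context_dim)           # stand-in for an image feature

samples = flow.forward(rng.normal(size=(100, pose_dim)), c)  # diverse hypotheses
mode = flow.forward(np.zeros(pose_dim), c)                   # single estimate

sample_log_probs = flow.log_prob(samples, c)
mode_log_prob = flow.log_prob(mode, c)
```

In this toy setting, `mode_log_prob` is at least as large as every entry of `sample_log_probs`. For flows with a `z`-dependent Jacobian, the image of `z = 0` is not guaranteed to be the exact mode.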

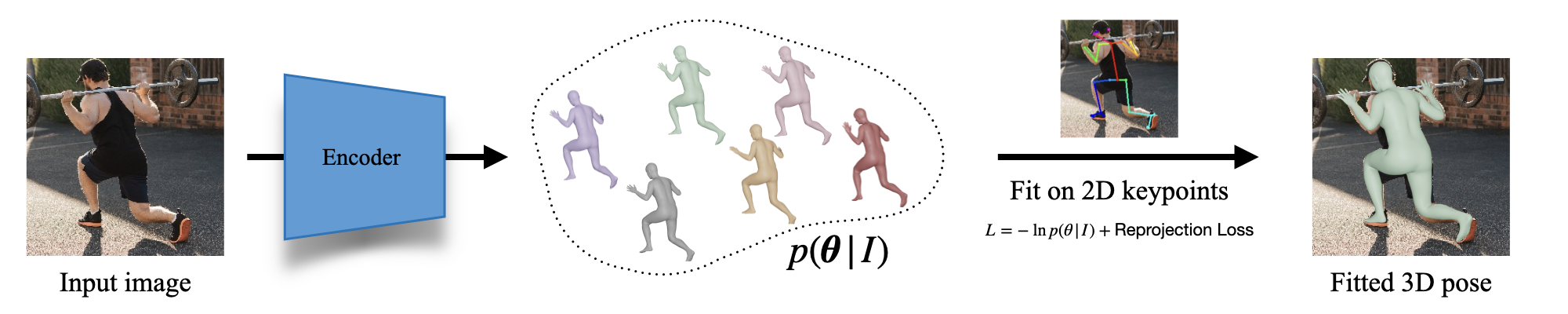

When keypoints (or other types of 2D evidence) are available, we can treat our model as an image-based prior and fit a human body model to the keypoints by combining the prior with a 2D reprojection term.
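A minimal sketch of this kind of fitting objective, under strong simplifying assumptions: a single 3D joint stands in for the body model, the projection is orthographic, and a Gaussian stands in for the learned image-conditioned likelihood. The function name `fit_joint` and the weight `reproj_weight` are hypothetical. The objective is `reproj_weight * ||A x - k||^2 + 0.5 * (x - mu)^T P (x - mu)`, that is, a reprojection term plus the negative log prior.

```python
import numpy as np


def fit_joint(keypoint_2d, prior_mean, prior_cov, reproj_weight=100.0):
    """Minimize reprojection error plus negative Gaussian log prior.

    Because the stand-in prior is Gaussian, the objective is quadratic and
    has a closed-form minimizer. With a flow-based prior one would instead
    run an iterative optimizer on the same kind of objective.
    """
    # Orthographic camera (assumption): keep x and y, drop depth.
    A = np.array([[1.0, 0.0, 0.0],
                  [0.0, 1.0, 0.0]])
    precision = np.linalg.inv(prior_cov)
    # Set the gradient to zero: (2w A^T A + P) x = 2w A^T k + P mu.
    H = 2.0 * reproj_weight * A.T @ A + precision
    b = 2.0 * reproj_weight * A.T @ keypoint_2d + precision @ prior_mean
    return np.linalg.solve(H, b), A


prior_mean = np.array([0.10, 0.20, 3.0])      # prior's best guess for the joint
prior_cov = np.diag([0.01, 0.01, 0.25])       # depth is the most uncertain axis
keypoint = np.array([0.15, 0.18])             # detected 2D keypoint

fitted, A = fit_joint(keypoint, prior_mean, prior_cov)

err_prior = np.linalg.norm(A @ prior_mean - keypoint)
err_fitted = np.linalg.norm(A @ fitted - keypoint)
```

The fitted joint moves toward the keypoint in the image plane, while the depth, which the 2D evidence cannot constrain in this toy setup, stays at the value preferred by the prior.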

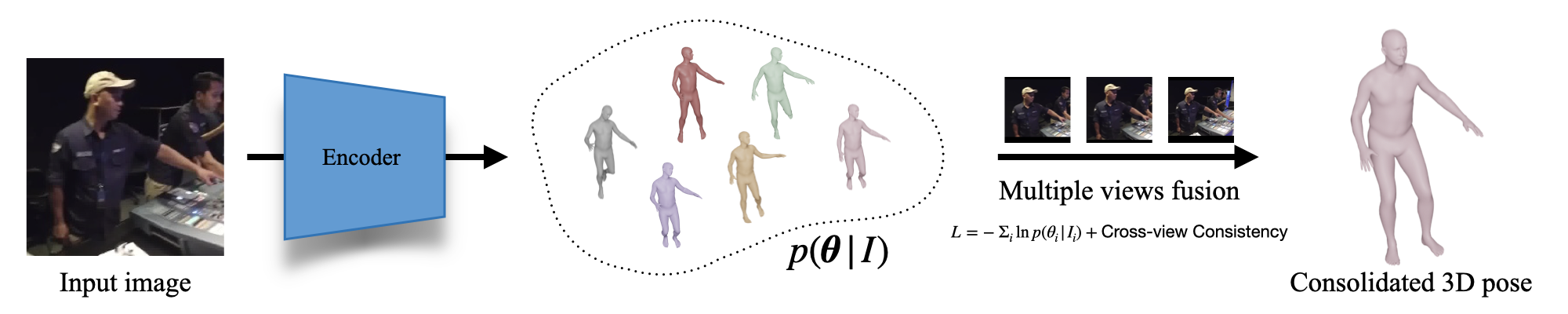

When multiple views are available, we can naturally consolidate all single-frame predictions by adding a cross-view consistency term.
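As a rough illustration of a cross-view consistency term, the sketch below fuses per-view estimates of pose parameters that should agree across views. It assumes, purely for illustration, that each view's likelihood is a diagonal Gaussian and that consistency is a quadratic penalty pulling every per-view estimate toward a shared consensus. The function `fuse_views` and the parameter `consistency_weight` are hypothetical names.

```python
import numpy as np


def fuse_views(means, precisions, consistency_weight=10.0, num_iters=500):
    """Alternating minimization of
        sum_n 0.5 * p_n * (theta_n - mu_n)^2
      + sum_n 0.5 * lambda * (theta_n - consensus)^2
    applied independently per dimension (diagonal Gaussians).
    """
    means = np.asarray(means, dtype=float)            # shape (views, dims)
    precisions = np.asarray(precisions, dtype=float)  # shape (views, dims)
    consensus = means.mean(axis=0)
    for _ in range(num_iters):
        # Closed-form update of each view's estimate given the consensus.
        per_view = (precisions * means + consistency_weight * consensus) / (
            precisions + consistency_weight
        )
        # Closed-form update of the consensus given the per-view estimates.
        consensus = per_view.mean(axis=0)
    return per_view, consensus


# Two views: view 0 is confident about dimension 0, view 1 about dimension 1.
means = [[0.0, 0.0],
         [1.0, 1.0]]
precisions = [[1.0, 0.01],
              [0.01, 1.0]]

per_view, consensus = fuse_views(means, precisions)

spread_before = np.abs(np.array(means)[0] - np.array(means)[1]).max()
spread_after = np.abs(per_view[0] - per_view[1]).max()
```

In each dimension the consensus ends up close to the estimate of the view that is more certain there, and the per-view estimates are pulled much closer together than the original single-view predictions.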

Architecture

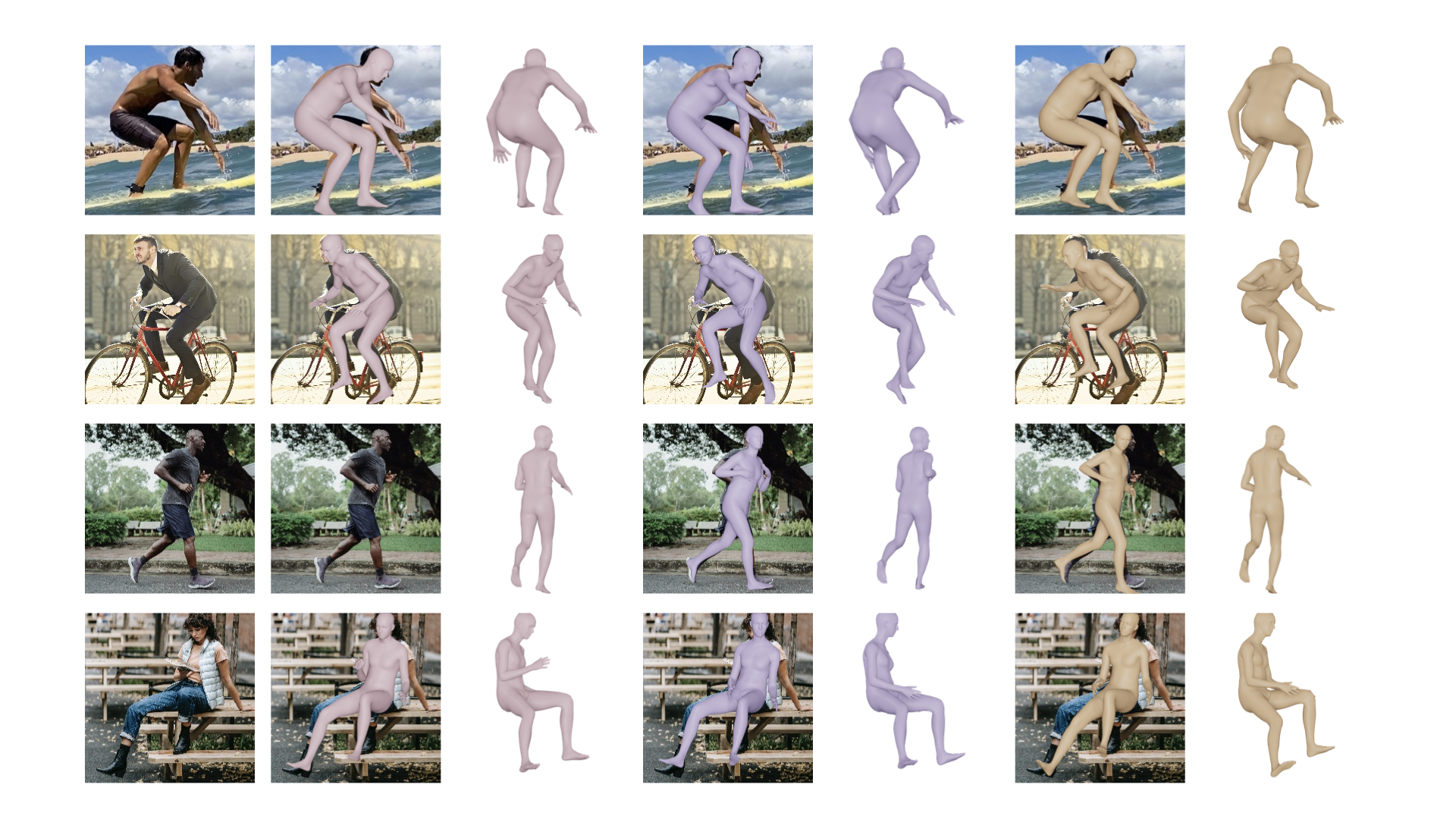

Results

Acknowledgements

NK and KD gratefully acknowledge support through the following grants: ARO W911NF-20-1-0080, NSF IIS 1703319, NSF TRIPODS 1934960, NSF CPS 2038873, ONR N00014-17-1-2093, and the DARPA-SRC C-BRIC, as well as support from Honda Research Institute. GP is supported by BAIR sponsors.

The design of this project page was based on this website.